Overview

What is Reassure

Reassure, formerly known as rn-perf-tool, is an automated performance testing tool that Callstack was contracted to develop. It helps us validate performance optimisations by benchmarking the rendering times of UI elements in our native apps before and after a change.

More information about Reassure can be found at https://www.callstack.com/open-source/reassure

How is it implemented in our app

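By default, Reassure uses Jest's file matcher with the value `<rootDir>/**/*.perf-test.[jt]s?(x)`, so any file whose name ends in .perf-test.js, .perf-test.jsx, .perf-test.ts or .perf-test.tsx is treated as a performance test.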
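
To make the file matcher concrete, the sketch below checks a few file paths against a regular expression that approximates the glob. The regular expression, the `isPerfTestFile` helper and the file paths are illustrative assumptions; Jest resolves the real glob itself, and none of these names come from Reassure.

```typescript
// Regex approximation of the glob `*.perf-test.[jt]s?(x)`.
// Assumption: this mirrors the glob for ordinary file names only.
const PERF_TEST_FILE = /\.perf-test\.[jt]sx?$/;

// Hypothetical helper for illustration; not part of Reassure or Jest.
function isPerfTestFile(filePath: string): boolean {
  return PERF_TEST_FILE.test(filePath);
}

// Hypothetical file paths.
const candidates: string[] = [
  "src/screens/Home/Home.perf-test.tsx",
  "src/screens/Racing/Racing.perf-test.ts",
  "src/screens/Home/Home.test.tsx",
  "src/App.perf-test.jsx",
];

for (const file of candidates) {
  const verdict = isPerfTestFile(file) ? "performance test" : "not a performance test";
  console.log(`${file}: ${verdict}`);
}
```

In this sketch only `Home.test.tsx` is rejected, which is why regular unit tests and performance tests can sit side by side in the same folder.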

Performance tests are "expensive" compared with unit tests, so not every scenario requires a corresponding performance test. Reassure tests were initially implemented in the following areas of the app codebase:

- App Startup (at the app level)

- Home (at the screen level)

- Racing (at the screen level)

- Sports (at the screen level)

However, these tests are preliminary and mostly measure the first render time. For stronger evidence of performance changes, developers can extend the tests to measure component re-render times as well as TTI (Time to Interactive), a key metric that indicates how "smooth" the interactive experience is for the end users of our native apps.

How do we use it to test performance when making code changes in the project

Performance testing remains an afterthought during new feature development work.

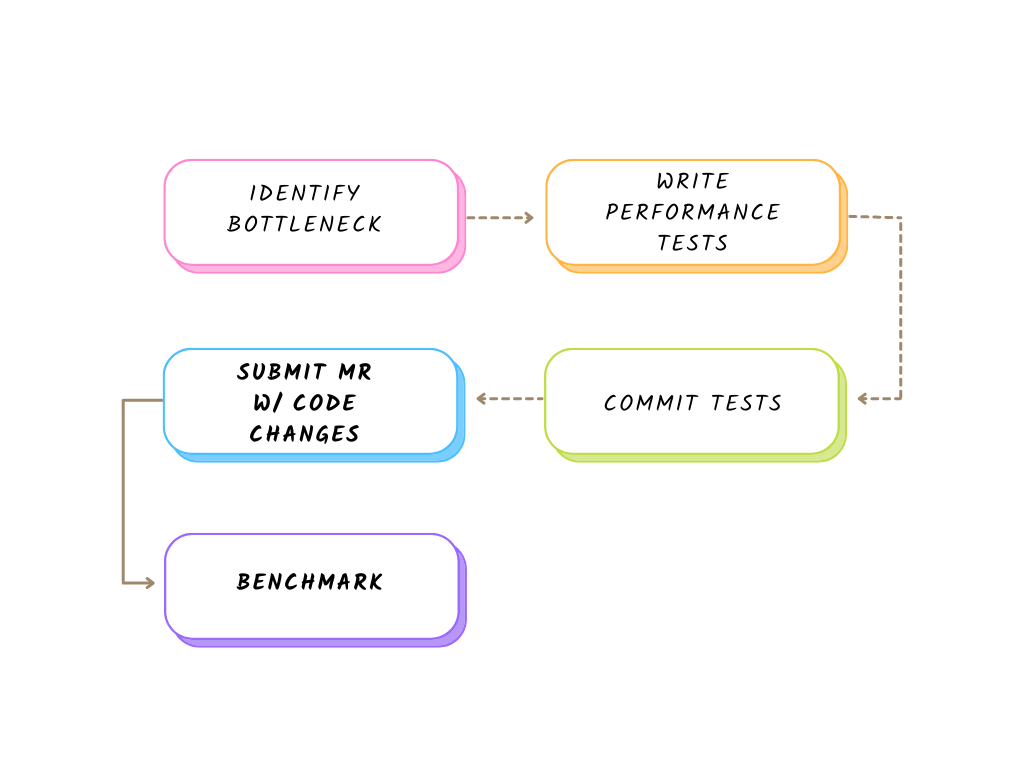

When working on a new feature, read this guide from the Identify bottleneck section through to Commit tests. Testing the performance impact of code changes, and creating or updating performance test files as part of that work, helps ensure that new changes don't introduce performance regressions.

1. Identify bottleneck

Performance tests are requirements-driven: the requirement may come from a bottleneck identified through user testing or from a planned JIRA story. It's important to articulate the desired outcome of the test, e.g. "swiping a race card should complete re-rendering in X ms".

2. Write performance tests

With a clear requirement and outcome, the next step is to model it with an automated test. Continuing with the example, the scenario that we need to model here is "swiping a race card", and the benchmark is "time to complete re-rendering". To measure the performance effectively, we also need to make sure to isolate this scenario and keep all other variables controlled.

For an actual guide on writing a test with Reassure, continue reading the next section.

3. Commit tests

Reassure works by comparing measurements taken on the current branch with measurements taken on the base branch, i.e. the stable version of our code (the origin/main branch). The test file therefore has to be committed to the repository first, so that it can serve as the baseline.

4. Submit MR with code changes

Once we have a reference point against which to measure any performance impact, Reassure runs the performance tests in our CI pipeline, where it is integrated with our Danger bot as a plugin. This allows Reassure to output the performance comparison automatically in the newly submitted MR, as an addition to the MR comment that Danger bot creates.
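
As a rough illustration of the Danger integration, a dangerfile can call Reassure's Danger plugin and point it at the generated report. This is a minimal sketch, not our actual pipeline configuration: it assumes the `dangerReassure` export from the `reassure` package (the export location may differ between Reassure versions) and assumes the report is written to `.reassure/output.md`.

```typescript
// dangerfile.ts (sketch; runs inside Danger, not as a standalone script)
import path from "path";
import { dangerReassure } from "reassure";

// Assumption: the CI job has already run the Reassure measurements for both
// the baseline and the current branch, producing `.reassure/output.md`.
dangerReassure({
  inputFilePath: path.join(__dirname, ".reassure/output.md"),
});
```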

5. Benchmark

By analysing the performance comparison benchmark results, we can make a decision on whether the MR can be approved, and whether the performance requirements (if any) have been met.

Example Reassure report

| Name | Render Duration | Render Count |
|---|---|---|
| RacingHome Screen | 584.8 ms → 521.5 ms (-63.3 ms, -10.8%) 🟢 | 1491 → 1356 (-135, -9.1%) 🟢🟢 |

Details

| Name | Render Duration | Render Count |
|---|---|---|
| RacingHome Screen | Baseline<br>Mean: 584.8 ms<br>Stdev: 25.9 ms (4.4%)<br>Runs: 636 603 598 590 588 584 578 570 562 539<br><br>Current<br>Mean: 521.5 ms<br>Stdev: 24.3 ms (4.7%)<br>Runs: 576 554 525 514 512 511 509 507 506 501 | Baseline<br>Mean: 1491<br>Stdev: 47.94 (3.2%)<br>Runs: 1499 1440 1462 1438 1529 1459 1459 1586 1507 1531<br><br>Current<br>Mean: 1356<br>Stdev: 45.88 (3.4%)<br>Runs: 1383 1413 1361 1395 1249 1361 1361 1361 1363 1313 |
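
To show how the summary row relates to the individual runs, the sketch below recomputes the render duration figures from the run values in the report above. It is an illustration, not Reassure's own implementation: the `summarise` and `compare` helpers are hypothetical, and the use of the sample standard deviation (dividing by n - 1) is an assumption, chosen because it reproduces the reported Stdev values.

```typescript
interface Summary {
  mean: number;
  stdev: number;
}

// Hypothetical helper: mean and sample standard deviation of a set of runs.
function summarise(runs: number[]): Summary {
  const mean = runs.reduce((sum, r) => sum + r, 0) / runs.length;
  // Assumption: sample variance (n - 1 in the denominator).
  const variance =
    runs.reduce((sum, r) => sum + (r - mean) ** 2, 0) / (runs.length - 1);
  return { mean, stdev: Math.sqrt(variance) };
}

// Hypothetical helper: absolute and relative change of current vs. baseline.
function compare(baselineRuns: number[], currentRuns: number[]) {
  const baseline = summarise(baselineRuns);
  const current = summarise(currentRuns);
  const diff = current.mean - baseline.mean;
  return { baseline, current, diff, relativeDiff: diff / baseline.mean };
}

// Render duration runs (ms) copied from the example report.
const baselineRuns = [636, 603, 598, 590, 588, 584, 578, 570, 562, 539];
const currentRuns = [576, 554, 525, 514, 512, 511, 509, 507, 506, 501];

const result = compare(baselineRuns, currentRuns);
console.log(
  `${result.baseline.mean.toFixed(1)} ms → ${result.current.mean.toFixed(1)} ms ` +
    `(${result.diff.toFixed(1)} ms, ${(result.relativeDiff * 100).toFixed(1)}%)`
);
console.log(
  `Stdev: baseline ${result.baseline.stdev.toFixed(1)} ms, ` +
    `current ${result.current.stdev.toFixed(1)} ms`
);
```

A negative difference means the current branch renders faster than the baseline. The standard deviation gives a sense of how noisy the runs are, which is worth checking before treating a small difference as a real improvement or regression.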